Welcome to Neuromorphic Interactive System Lab @ HKUST

NISL focuses on frontier research in neuromorphic engineering, such as reinforcement learning for robotic visuomotor systems, intelligent brain-computer interfaces, and computational gaze prediction systems. Our research is driven by fundamental theoretical studies in neuroscience, combined with big data and machine learning, and applied to real-world problems.

NISLGaze Dataset

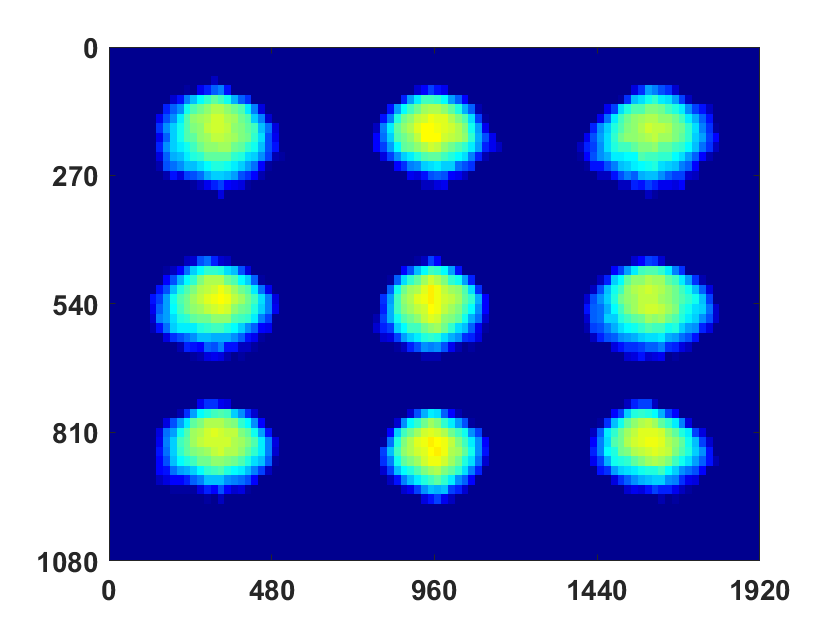

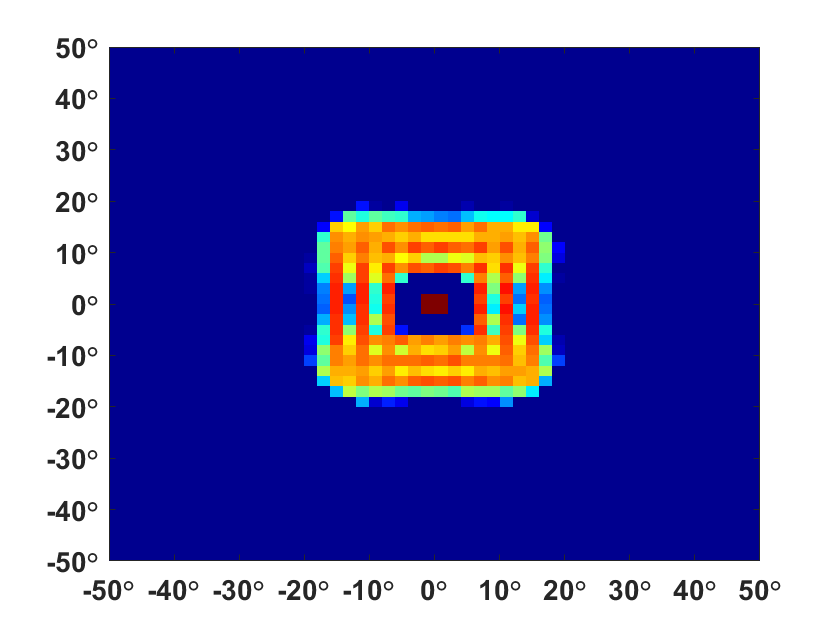

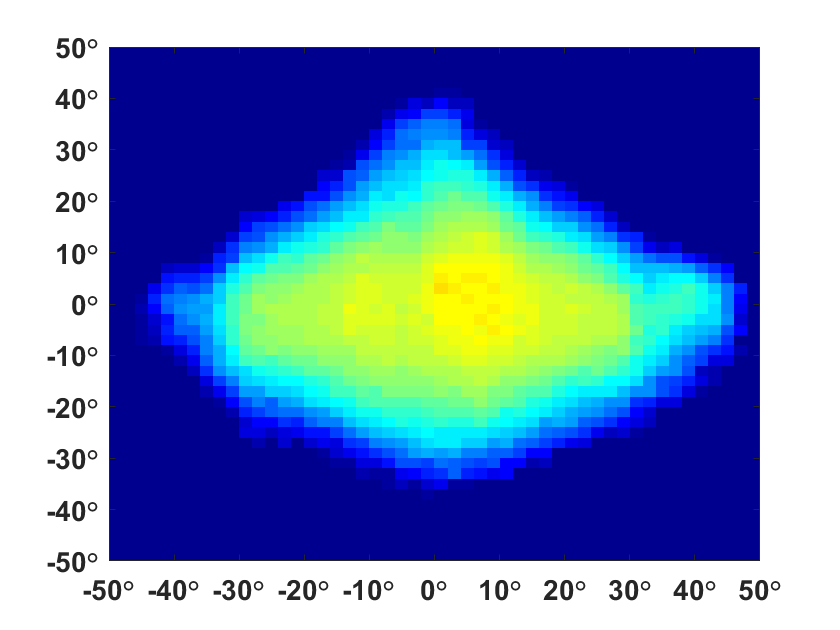

The NISLGaze dataset is a large-scale gaze dataset for appearance-based gaze estimation. It contains 21 subjects (10 females, 11 with glasses) and includes large variability in head pose and subject location in a realistic setting.

| Distribution of face location | Distribution of gaze direction | Distribution of head pose |

1. Description

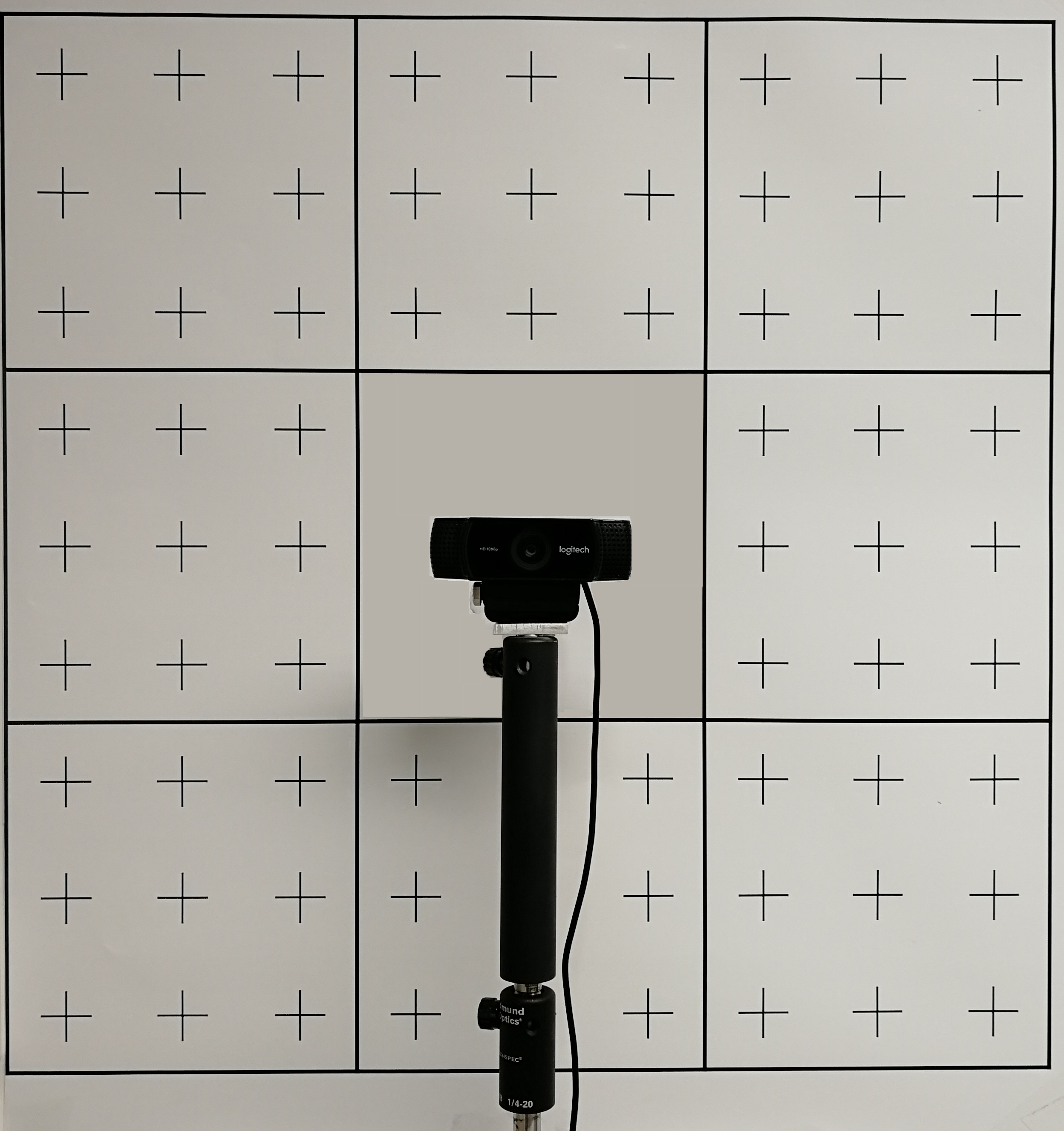

The data collection was divided into nine sessions. During each session, the head location was fixed. The subject was instructed to gaze at the nine gaze blocks sequentially. The central block was gazed at three times, while the others were gazed at only once. When the subject was asked to gaze at the central block, s/he was instructed to look at the camera; when s/he was asked to gaze at one of the other eight blocks, s/he was instructed to look at each of the crosses one by one. Transitions between gaze targets were signaled by a notification sound. The subject was instructed to rotate his/her head freely. We recorded one video for each gaze block, which lasted for about 30 s. This collection procedure yielded 2,079 videos (11 videos/location × 9 locations/subject × 21 subjects = 2,079 videos). Data collection for each subject took one to three days. For most subjects (15), data collection spanned three days (three sessions per day).

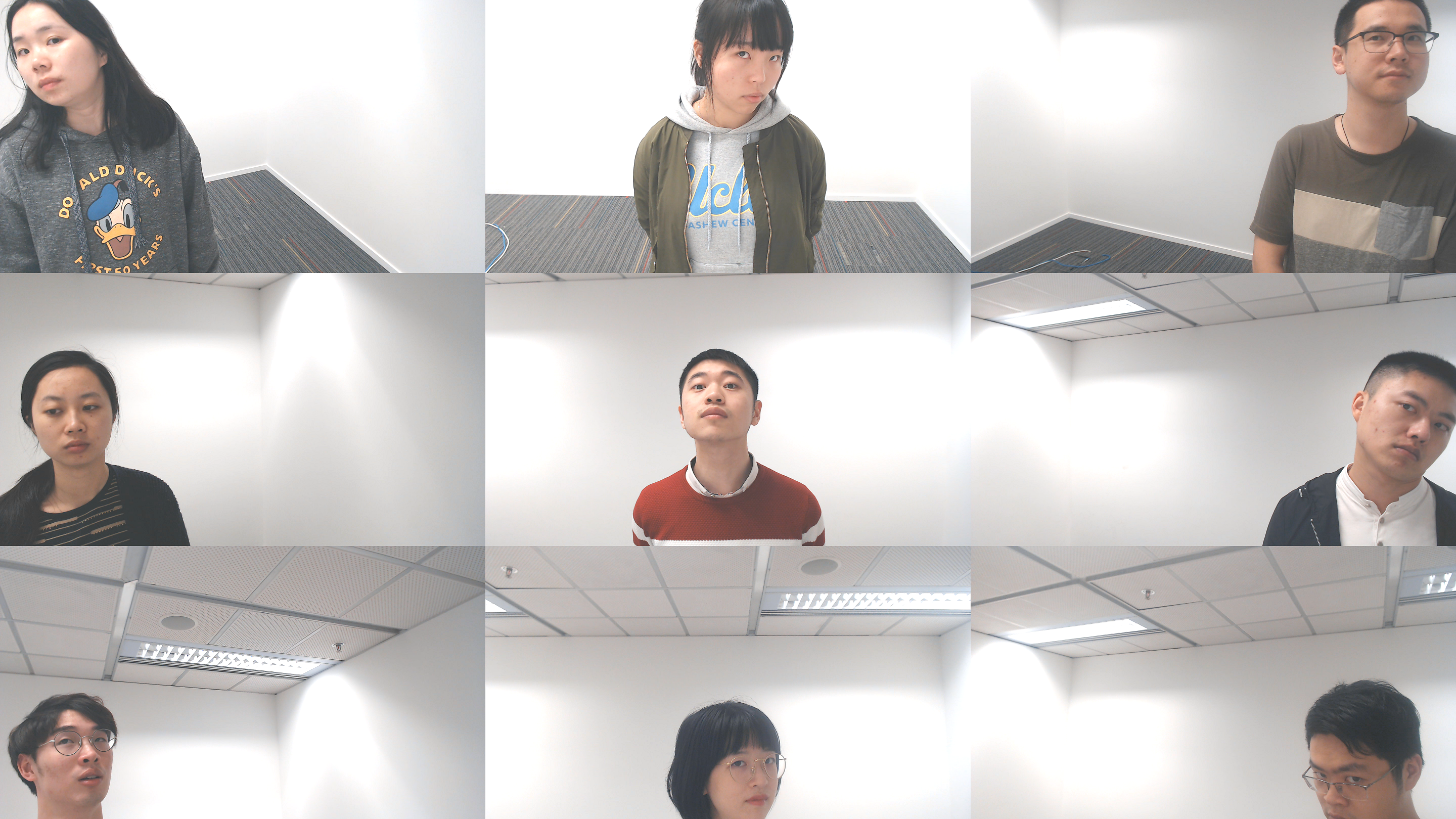

| Nine face locations in the images | Gaze target at each location |

2. Processing

We labeled our dataset by assuming that the centers of the subjects' eyes were at a fixed location in space. Strictly speaking, this is not true, since subjects were rotating their heads during the data collection. However, deviations due to this rotation were small. To be specific, we created a right-handed coordinate system whose origin was located at the center of the gaze targets. The z-axis pointed in towards the wall. The y-axis pointed up. We assumed that the subjects' eyes were fixed at (0, eye height - 160 cm, -90 cm). The locations of the crosses on the wall were of the form (x, y, 0). The distance between two adjacent crosses was 6 cm. The camera was at (0, 0, -9.5 cm).
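The labeling geometry above can be sketched in a few lines. This is a minimal illustration under the stated fixed-eye assumption, not the code used to build the dataset. The function names and the yaw/pitch convention are hypothetical, and the resulting vector is expressed in the wall coordinate system described above, before any normalization.

```python
import numpy as np

EYE_DISTANCE_CM = 90.0        # assumed eye distance from the wall plane (z = -90 cm)
REFERENCE_HEIGHT_CM = 160.0   # height of the origin, from "eye height - 160 cm"
CAMERA_POS_CM = np.array([0.0, 0.0, -9.5])  # camera location given in the text


def eye_position(eye_height_cm):
    """Assumed fixed eye location in the wall coordinate system (cm)."""
    return np.array([0.0, eye_height_cm - REFERENCE_HEIGHT_CM, -EYE_DISTANCE_CM])


def gaze_direction(target_cm, eye_height_cm):
    """Unit vector pointing from the assumed eye location to the gaze target."""
    v = np.asarray(target_cm, dtype=float) - eye_position(eye_height_cm)
    return v / np.linalg.norm(v)


def to_yaw_pitch_deg(g):
    """Hypothetical angle convention: yaw about the y-axis, pitch towards +y."""
    yaw = np.degrees(np.arctan2(g[0], g[2]))
    pitch = np.degrees(np.arcsin(g[1]))
    return yaw, pitch


# A subject with eye height 160 cm looking at the cross one step (6 cm) along x.
g = gaze_direction([6.0, 0.0, 0.0], eye_height_cm=160.0)
yaw, pitch = to_yaw_pitch_deg(g)
```

For a subject whose eyes are at the reference height, the cross 6 cm from the center corresponds to a yaw of about 3.8 degrees and zero pitch, and looking at the camera gives a gaze direction along the z-axis.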

We sampled images from the videos at 10 fps. We used OpenFace [1] to extract the facial landmarks. We then removed images on which the landmark detector failed or did not detect both eyes. We also removed images captured during blinks, based on the openness of the eyes (termed the eye aspect ratio), which is calculated from the detected facial landmarks [2]. The NISLGaze dataset contains 496,695 images in total (about 2,500 images for each subject at each head location).
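The eye aspect ratio from [2] is the sum of the two vertical eyelid distances divided by twice the horizontal eye width. The sketch below shows that formula under some assumptions: each eye is given as six 2D landmarks in the order used in [2] (outer corner, two upper-lid points, inner corner, two lower-lid points), the threshold of 0.2 is a hypothetical value rather than the one used for NISLGaze, and the mapping from OpenFace output to these six points is not shown.

```python
import numpy as np


def eye_aspect_ratio(eye):
    """Eye aspect ratio for six landmarks p1..p6 given as an array of shape (6, 2).

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    and p6 and p5 are the lower-lid points below p2 and p3.
    """
    eye = np.asarray(eye, dtype=float)
    vertical_a = np.linalg.norm(eye[1] - eye[5])  # |p2 - p6|
    vertical_b = np.linalg.norm(eye[2] - eye[4])  # |p3 - p5|
    horizontal = np.linalg.norm(eye[0] - eye[3])  # |p1 - p4|
    return (vertical_a + vertical_b) / (2.0 * horizontal)


def is_blink(left_eye, right_eye, threshold=0.2):
    """Flag a frame as a blink if either eye is nearly closed (threshold is assumed)."""
    return min(eye_aspect_ratio(left_eye), eye_aspect_ratio(right_eye)) < threshold


# Synthetic landmarks for illustration only.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
```

Taking the minimum over both eyes is one possible design choice; averaging the two ratios is another common option.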

3. Normalization

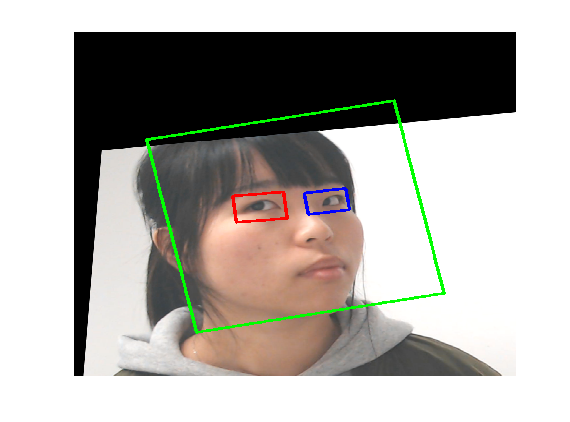

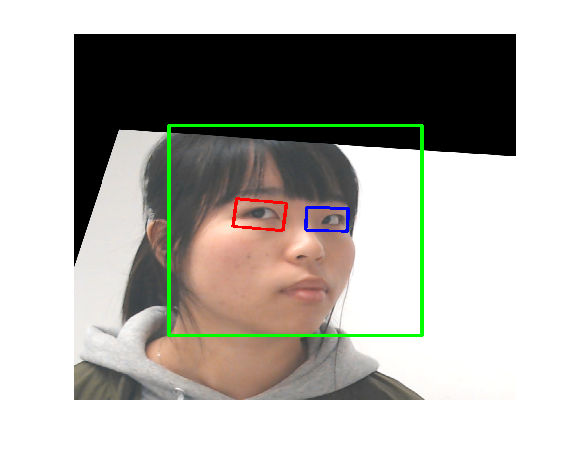

We adopt the modified data normalization method introduced in [3]. This method rotates and translates a virtual camera so that it faces a reference point on the face at a fixed distance and cancels out the roll angle of the head. The images are normalized by perspective warping, converted to grayscale, and histogram-equalized.

We then align the images based on detected landmarks. The face images are aligned so that the center of each eye is at a fixed location in the resulting images. The eye images are aligned so that the eye corners are at fixed locations.

We define two normalization spaces. Normalization Space I is the coordinate system in which the virtual camera is rotated so that it points to the center of both eyes, but the roll angle of the face is not cancelled out. The ground truth gaze directions are assigned in this space.

Normalization Space II is the coordinate system in which the virtual camera is rotated so that it points to the center of both eyes and the roll of the face is cancelled out. Training, testing and calibration are conducted in this space.
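The relation between the two spaces can be sketched as a pair of rotation matrices, following the construction in [3]. This is only an illustration under assumptions: `normalization_rotation` and `gaze_space_one_to_two` are hypothetical names, `head_rot` is assumed to be a 3×3 head rotation matrix in camera coordinates whose first column is the head's x-axis, and for Space I the camera's own x-axis is assumed as the reference so that the head roll is left untouched. Image warping and scaling are omitted.

```python
import numpy as np


def normalization_rotation(eye_center, head_rot, cancel_roll):
    """Rotation from camera coordinates to a normalized space.

    The new z-axis points at `eye_center` (center of both eyes, camera coordinates).
    With cancel_roll=True the head's x-axis is used as reference (Space II);
    otherwise the camera's x-axis is used (assumed here for Space I).
    """
    eye_center = np.asarray(eye_center, dtype=float)
    z_axis = eye_center / np.linalg.norm(eye_center)
    if cancel_roll:
        x_ref = np.asarray(head_rot, dtype=float)[:, 0]
    else:
        x_ref = np.array([1.0, 0.0, 0.0])
    y_axis = np.cross(z_axis, x_ref)
    y_axis /= np.linalg.norm(y_axis)
    x_axis = np.cross(y_axis, z_axis)
    x_axis /= np.linalg.norm(x_axis)
    return np.stack([x_axis, y_axis, z_axis])  # rows are the new axes


def gaze_space_one_to_two(gaze_one, rot_one, rot_two):
    """Map a gaze vector from Space I to Space II (rotation only, as in [3])."""
    return rot_two @ rot_one.T @ np.asarray(gaze_one, dtype=float)


# Example: a head rolled by 20 degrees, eyes slightly off the optical axis.
roll = np.radians(20.0)
head_rot = np.array([[np.cos(roll), -np.sin(roll), 0.0],
                     [np.sin(roll), np.cos(roll), 0.0],
                     [0.0, 0.0, 1.0]])
eye_center = np.array([10.0, -5.0, 60.0])
rot_one = normalization_rotation(eye_center, head_rot, cancel_roll=False)
rot_two = normalization_rotation(eye_center, head_rot, cancel_roll=True)
gaze_two = gaze_space_one_to_two([0.0, 0.0, -1.0], rot_one, rot_two)
```

Because both matrices are pure rotations, a label assigned in Space I keeps its length when it is mapped to Space II; only its orientation about the viewing axis changes.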

| Original images | Normalization Space I | Normalization Space II |

The normalized data can be downloaded here.

Citation

If you are interested in the NISLGaze Dataset, please cite us:

@article{chen2022towards,
  title={Towards High Performance Low Complexity Calibration in Appearance Based Gaze Estimation},
  author={Chen, Zhaokang and Shi, Bertram},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year={2022},
  volume={},
  number={},
  pages={1-1},
  publisher={IEEE},
  doi={10.1109/TPAMI.2022.3148386}
}

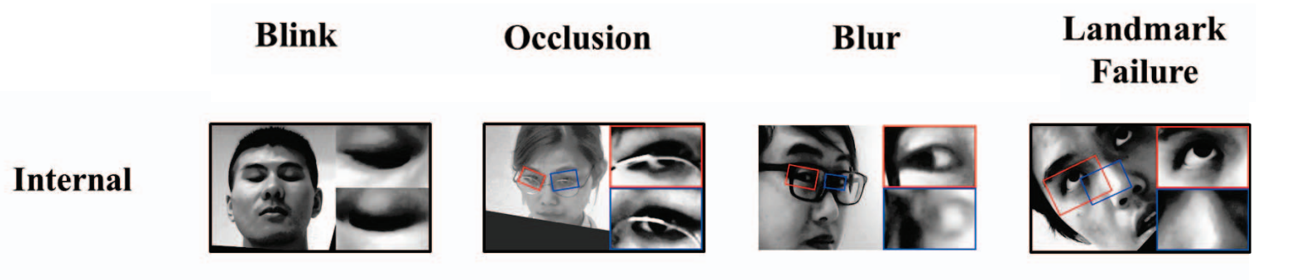

NISLGaze Outlier Dataset

The NISLGaze Outlier Dataset (paper) contains a subset of the aforementioned NISLGaze dataset. We manually labeled each image in the NISLGaze Outlier Dataset with one of five categories: Normal, Blink, Blur, Occlusion, or Landmark Failure. Some outlier examples are shown here:

| Outlier Examples |

Citation

If you are interested in the NISLGaze Outlier Dataset, please cite us:

@inproceedings{chen2019unsupervised,
  title={Unsupervised outlier detection in appearance-based gaze estimation},
  author={Chen, Zhaokang and Deng, Didan and Pi, Jimin and Shi, Bertram E},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops},
  pages={0--0},
  year={2019}
}

References

[1] T. Baltrusaitis, A. Zadeh, Y. C. Lim, and L.-P. Morency, “OpenFace 2.0: Facial behavior analysis toolkit,” in IEEE International Conference on Automatic Face & Gesture Recognition, 2018.

[2] J. Cech and T. Soukupova, “Real-time eye blink detection using facial landmarks,” Computer Vision Winter Workshop, 2016.

[3] X. Zhang, Y. Sugano, and A. Bulling, “Revisiting data normalization for appearance-based gaze estimation,” in Proceedings of the ACM Symposium on Eye Tracking Research & Applications, 2018.